What is Kubernetes?

Kubernetes is an open-source container orchestration engine for automating the deployment, scaling, and management of containerized applications. It is also known as K8s because there are 8 letters between K and s.

Why Kubernetes?

As we saw in the introduction to Docker blog, containers are a good way to bundle and run your applications. In a production environment, you need to manage the containers that run the applications and ensure that there is no downtime.

For example, if a container goes down, another container needs to start. Wouldn't it be easier if this behavior were handled by a system?

That's where Kubernetes comes to the rescue! Kubernetes provides you with a framework to run distributed systems resiliently. It takes care of scaling and failover for your application, provides deployment patterns, and more. For example, Kubernetes can manage a canary deployment for your system. Its features include scheduling, scaling, load balancing, fault tolerance, automated rollouts and rollbacks, and self-healing.

Cluster Architecture

A Kubernetes cluster consists of a master node (also called the control plane), which manages the worker nodes. Worker nodes are virtual machines or physical servers running within a data center. They expose the underlying network and storage resources to the application. All these nodes join together to form a cluster that provides fault tolerance and replication. Worker nodes were previously called minions.

Master Node

The master node is responsible for managing the whole cluster. It monitors the health of the worker nodes and holds information about the members of the cluster and their configuration. For example, if a worker node fails, the master node reschedules its workload onto another healthy worker node. The Kubernetes master is responsible for scheduling, provisioning, controlling, and exposing the API to clients. It coordinates activities inside the cluster and communicates with worker nodes to keep Kubernetes and applications running.

Components of the Master Node

The control plane's components make global decisions about the cluster (for example, scheduling), as well as detecting and responding to cluster events (for example, starting up a new pod when a Deployment's replicas field is unsatisfied).
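
The "replicas field is unsatisfied" example can be sketched as a small reconciliation loop: compare the desired state with the observed state, then act to close the gap. This is a toy model only. `ToyDeployment` and `reconcile` are hypothetical names invented for illustration, not part of any Kubernetes API.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class ToyDeployment:
    """Hypothetical stand-in for a Deployment: a name and a desired replica count."""
    name: str
    replicas: int
    pods: list = field(default_factory=list)  # names of the pods currently running


_pod_ids = count(1)  # simple source of unique pod suffixes for this sketch


def reconcile(deployment: ToyDeployment) -> list:
    """Bring the observed pod count in line with the desired replica count.

    Returns the list of actions taken, purely for illustration.
    """
    actions = []
    # Too few pods: start new ones until the replica count is satisfied.
    while len(deployment.pods) < deployment.replicas:
        pod = f"{deployment.name}-{next(_pod_ids)}"
        deployment.pods.append(pod)
        actions.append(f"start {pod}")
    # Too many pods: stop the extras.
    while len(deployment.pods) > deployment.replicas:
        pod = deployment.pods.pop()
        actions.append(f"stop {pod}")
    return actions


web = ToyDeployment(name="web", replicas=3)
reconcile(web)              # starts web-1, web-2, web-3
web.pods.remove("web-2")    # simulate a pod crashing
recovery = reconcile(web)   # starts one replacement pod
```

Running `reconcile` again after the simulated crash starts exactly one new pod, which is the self-healing behavior described above in miniature.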

API server

The API server is the front end for the Kubernetes control plane. The Kubernetes API server validates and configures data for the API objects which include pods, services, replication controllers, and others.

etcd

etcd is a lightweight, distributed key-value database. It is the central store for the current cluster state at any point in time. Cluster components read and write this state through the API server, which makes etcd the single source of truth for all the nodes, components, and masters that form the Kubernetes cluster.
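
To make the "key-value store as single source of truth" idea concrete, here is a minimal in-memory sketch. It is not the etcd API: `ToyStateStore`, its methods, and the example keys are assumptions made for illustration, and real etcd adds replication, consensus, and watches on top of this basic model.

```python
class ToyStateStore:
    """In-memory key-value store with a revision counter (a toy, not etcd)."""

    def __init__(self):
        self._data = {}
        self.revision = 0  # incremented on every write

    def put(self, key: str, value: str) -> int:
        """Store a value and return the revision created by this write."""
        self.revision += 1
        self._data[key] = value
        return self.revision

    def get(self, key: str):
        """Return the stored value, or None if the key does not exist."""
        return self._data.get(key)

    def list_prefix(self, prefix: str) -> dict:
        """Return all entries whose key starts with the prefix, sorted by key."""
        return {k: v for k, v in sorted(self._data.items()) if k.startswith(prefix)}


store = ToyStateStore()
store.put("/registry/pods/default/web-1", "Running")
store.put("/registry/pods/default/web-2", "Pending")
store.put("/registry/services/default/web", "ClusterIP")

pods = store.list_prefix("/registry/pods/")  # only the two pod entries
```

Hierarchical keys make it cheap to ask for "everything of one kind", which is why a prefix query is included in the sketch.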

Scheduler

The scheduler is responsible for assigning Pods to nodes. It places each Pod according to the constraints specified in the configuration file, such as resource requirements.
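
A rough way to picture constraint-based placement is a two-step process: filter out nodes that cannot run the Pod, then score the remaining ones and pick the best. The sketch below assumes just two kinds of constraints (resource requests and a label selector) and a deliberately simple score. `ToyNode`, `ToyPodRequest`, `fits`, and `schedule` are hypothetical names, and the real scheduler considers many more factors.

```python
from dataclasses import dataclass


@dataclass
class ToyNode:
    name: str
    free_cpu_millis: int
    free_memory_mib: int
    labels: dict


@dataclass
class ToyPodRequest:
    name: str
    cpu_millis: int
    memory_mib: int
    node_selector: dict


def fits(node: ToyNode, pod: ToyPodRequest) -> bool:
    """Filter step: the node needs enough free resources and matching labels."""
    has_resources = (
        node.free_cpu_millis >= pod.cpu_millis
        and node.free_memory_mib >= pod.memory_mib
    )
    matches_labels = all(
        node.labels.get(key) == value for key, value in pod.node_selector.items()
    )
    return has_resources and matches_labels


def schedule(pod: ToyPodRequest, nodes: list):
    """Score step: among feasible nodes, prefer the one with the most CPU left over."""
    feasible = [node for node in nodes if fits(node, pod)]
    if not feasible:
        return None  # no node satisfies the constraints; the pod stays unscheduled
    best = max(feasible, key=lambda node: node.free_cpu_millis - pod.cpu_millis)
    return best.name


nodes = [
    ToyNode("node-a", free_cpu_millis=500, free_memory_mib=1024, labels={"disk": "ssd"}),
    ToyNode("node-b", free_cpu_millis=2000, free_memory_mib=4096, labels={"disk": "hdd"}),
    ToyNode("node-c", free_cpu_millis=1500, free_memory_mib=2048, labels={"disk": "ssd"}),
]
web = ToyPodRequest("web", cpu_millis=1000, memory_mib=512, node_selector={"disk": "ssd"})
chosen = schedule(web, nodes)  # node-a lacks CPU, node-b has the wrong label
```

Here `node-c` is the only node that passes the filter. A request that no node can satisfy returns `None`, the toy equivalent of a Pod that stays pending.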

Controller Manager

It is a control plane component that runs controller processes. Logically, each controller is a separate process, but to reduce complexity, they are all compiled into a single binary and run in a single process.

There are many different types of controllers. Some examples of them are:

Node controller: Responsible for noticing and responding when nodes go down.

Job controller: Watches for Job objects that represent one-off tasks, then creates Pods to run those tasks to completion.

EndpointSlice controller: Populates EndpointSlice objects (to provide a link between Services and Pods).

ServiceAccount controller: Creates default ServiceAccounts for new namespaces.
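
As one illustration of what a controller does, the node controller's job of noticing when nodes go down can be approximated by checking how long ago each node last reported in. The function name, the heartbeat dictionary, and the 40-second grace period below are assumptions chosen for the sketch, not values taken from Kubernetes itself.

```python
def find_unhealthy_nodes(last_heartbeat: dict, now: float, grace_seconds: float = 40.0) -> list:
    """Return the names of nodes whose last heartbeat is older than the grace period.

    last_heartbeat maps a node name to the timestamp (in seconds) of its last report.
    """
    return sorted(
        name
        for name, seen_at in last_heartbeat.items()
        if now - seen_at > grace_seconds
    )


# Timestamps are plain numbers here so that the example is deterministic.
heartbeats = {"node-a": 100.0, "node-b": 55.0, "node-c": 95.0}
unhealthy = find_unhealthy_nodes(heartbeats, now=100.0)
```

Only `node-b` has been silent for longer than the grace period, so it is the one a controller would react to, for example by having its Pods rescheduled elsewhere.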

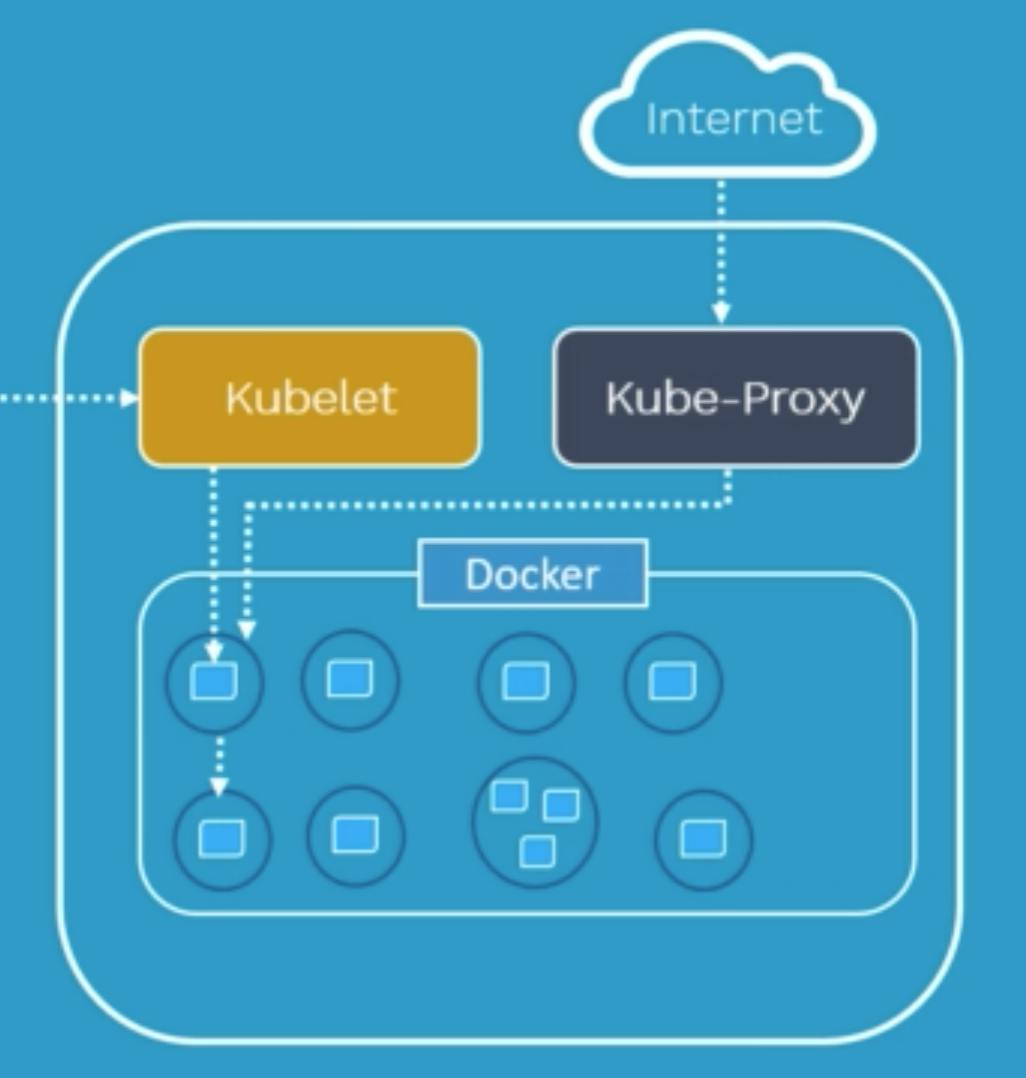

Worker Node

A worker node is any VM or physical server where containers are deployed. Every node in the Kubernetes cluster must run a container runtime such as containerd or CRI-O.

kubelet

It is an agent that runs on each node in the cluster. It makes sure that containers are running in a Pod.

The kubelet takes a set of PodSpecs that are provided through various mechanisms and ensures that the containers described in those PodSpecs are running and healthy. The kubelet doesn't manage containers that were not created by Kubernetes.

kube-proxy

kube-proxy is a network proxy that runs on each node in your cluster, implementing part of the Kubernetes Service concept.

It maintains network rules on nodes. These network rules allow network communication to your Pods from network sessions inside or outside of your cluster.

It uses the operating system packet filtering layer if there is one and it's available. Otherwise, kube-proxy forwards the traffic itself.
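
The core idea behind a Service, one stable name in front of a changing set of Pods, can be sketched as a lookup table that spreads requests over the current backends. This toy uses round-robin selection so that the result is predictable. `ToyServiceProxy` and the addresses are made up for illustration, and the real kube-proxy normally programs the operating system's packet filtering rules instead of handling each request in code like this.

```python
from itertools import cycle


class ToyServiceProxy:
    """Maps a service name to its backend pod addresses and rotates through them."""

    def __init__(self):
        self._backends = {}

    def set_endpoints(self, service: str, pod_addresses: list) -> None:
        """Replace the backends for a service, e.g. after pods are added or removed."""
        self._backends[service] = cycle(list(pod_addresses))

    def route(self, service: str) -> str:
        """Pick the next backend for the service in round-robin order."""
        return next(self._backends[service])


proxy = ToyServiceProxy()
proxy.set_endpoints("web", ["10.0.0.4:8080", "10.0.0.5:8080"])
picks = [proxy.route("web") for _ in range(3)]
```

Clients only ever refer to `"web"`. Which Pod actually answers is decided by the proxy layer and can change as Pods come and go.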

Container runtime

The container runtime is the component that actually runs containers. It is responsible for managing the execution and lifecycle of containers within the Kubernetes environment. Kubernetes supports container runtimes such as containerd and CRI-O, as well as any other implementation of the Kubernetes CRI (Container Runtime Interface).

Pods

Pods are the smallest deployable units of computing that you can create and manage in Kubernetes. A Pod is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers. A Pod's contents are always co-located and co-scheduled, and run in a shared context.
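
To show what "a specification for how to run the containers" looks like, the sketch below builds a minimal single-container Pod manifest as a Python dictionary and serializes it to JSON. The helper `make_pod_manifest`, the Pod name `web`, and the image `nginx:1.25` are example choices, not something prescribed by this post.

```python
import json


def make_pod_manifest(name: str, image: str, port: int) -> dict:
    """Build a minimal Pod manifest with a single container."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }
            ]
        },
    }


manifest = make_pod_manifest("web", "nginx:1.25", 80)
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Manifests are more commonly written in YAML, but JSON describes the same object, so the printed output could be saved to a file and passed to `kubectl apply -f`.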

Containers

Containers are the runtime environments for containerized applications. Each container packages an application together with its dependencies. Containers run inside Pods and are well suited to running microservices. For more detailed information, check out my blog on Docker.

Install Tools

kubectl

The Kubernetes command-line tool, kubectl, allows you to run commands against Kubernetes clusters. You can use kubectl to deploy applications, inspect and manage cluster resources, and view logs.

kubectl is installable on a variety of Linux platforms, macOS, and Windows.
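
For a feel of the three tasks mentioned above (deploying, inspecting, and viewing logs), the sketch below assembles a few common kubectl command lines in Python without executing them. The helper `kubectl_args`, the file name `pod.json`, and the Pod name `web` are hypothetical. To actually run a command, the resulting list could be passed to `subprocess.run` on a machine where kubectl is installed and configured.

```python
import shlex


def kubectl_args(*args: str, namespace: str = None) -> list:
    """Build an argument list for kubectl, optionally scoped to a namespace."""
    argv = ["kubectl", *args]
    if namespace:
        argv += ["--namespace", namespace]
    return argv


deploy = kubectl_args("apply", "-f", "pod.json")                  # deploy from a manifest file
inspect = kubectl_args("get", "pods", "-o", "wide", namespace="default")  # list pods
logs = kubectl_args("logs", "web")                                # view the logs of a pod

for command in (deploy, inspect, logs):
    print(shlex.join(command))
```

Building the command as a list rather than a single string avoids shell quoting problems if a name ever contains spaces or special characters.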

minikube

minikube is a tool that lets you run Kubernetes locally. minikube runs an all-in-one or a multi-node local Kubernetes cluster on your personal computer (including Windows, macOS and Linux PCs) so that you can try out Kubernetes, or for daily development work.

You can follow the official Get Started! guide if your focus is on getting the tool installed.

kubeadm

You can use the kubeadm tool to create and manage Kubernetes clusters. It performs the actions necessary to get a minimum viable, secure cluster up and running in a user-friendly way.

You can follow the official kubeadm installation guide in the Kubernetes documentation if your focus is on getting the tool installed.

Play-with-k8s

Play-with-k8s provides a Kubernetes playground similar to play-with-docker. A GitHub or Docker account is required. You can find it at labs.play-with-k8s.com.

Conclusion

In conclusion, Kubernetes plays an important role in container orchestration. Features such as scheduling, self-healing, and automated rollouts and rollbacks simplify application deployment and management, which makes Kubernetes a strategic choice for businesses running containerized applications in production. As we embrace Kubernetes, we pave the way for more agile and reliable cloud-native applications.

For further exploration and mastery, check out the official Kubernetes documentation: https://kubernetes.io/docs/home/